Nebius Group NV

NASDAQ:NBIS

| US |

|

Johnson & Johnson

NYSE:JNJ

|

Pharmaceuticals

|

| US |

|

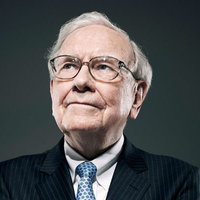

Berkshire Hathaway Inc

NYSE:BRK.A

|

Financial Services

|

| US |

|

Bank of America Corp

NYSE:BAC

|

Banking

|

| US |

|

Mastercard Inc

NYSE:MA

|

Technology

|

| US |

|

UnitedHealth Group Inc

NYSE:UNH

|

Health Care

|

| US |

|

Exxon Mobil Corp

NYSE:XOM

|

Energy

|

| US |

|

Pfizer Inc

NYSE:PFE

|

Pharmaceuticals

|

| US |

|

Palantir Technologies Inc

NYSE:PLTR

|

Technology

|

| US |

|

Nike Inc

NYSE:NKE

|

Textiles, Apparel & Luxury Goods

|

| US |

|

Visa Inc

NYSE:V

|

Technology

|

| CN |

|

Alibaba Group Holding Ltd

NYSE:BABA

|

Retail

|

| US |

|

JPMorgan Chase & Co

NYSE:JPM

|

Banking

|

| US |

|

Coca-Cola Co

NYSE:KO

|

Beverages

|

| US |

|

Walmart Inc

NYSE:WMT

|

Retail

|

| US |

|

Verizon Communications Inc

NYSE:VZ

|

Telecommunication

|

| US |

|

Chevron Corp

NYSE:CVX

|

Energy

|

Q3-2024 Earnings Call

AI Summary

Earnings Call on Oct 31, 2024

Revenue Growth: Nebius Group's revenue grew 2.7x compared to the previous quarter, reflecting rapid expansion in its core AI infrastructure business.

Strong Cash Position: The company reported cash and cash equivalents of around $2.3 billion as of September 30, 2024.

Accelerated CapEx: Capital expenditures reached approximately $400 million for the first nine months, with Q4 CapEx planned to exceed that as Nebius ramps up investments in GPUs and data centers.

AI Infrastructure Expansion: Plans include tripling capacity at the Finnish data center, launching a new colocation facility in Paris, and deploying over 20,000 GPUs by year-end.

2025 ARR Guidance: Nebius maintains a $500 million to $1 billion ARR guidance range for 2025, with potential to exceed the midpoint if a share buyback is not required.

NVIDIA Partnership: The company has a longstanding relationship with NVIDIA, is well-positioned to secure next-generation GPUs, and expects to be among the first to deploy Blackwells in 2025.

Non-Core Businesses: Units like Toloka, Avride, and TripleTen showed strong growth, with Toloka revenue up 4x YoY and TripleTen student numbers up 3x YoY.

Global Expansion: Nebius is pushing into both Europe and the U.S., with a focus on building a global data center network and expanding its U.S. customer base.

Nebius reported rapid revenue growth, with Q3 revenue increasing 2.7x compared to the previous quarter. The company highlighted a strong cash position of $2.3 billion and ongoing investments to accelerate growth, particularly in AI infrastructure. Capital expenditures for the first nine months were around $400 million, with further increases expected in Q4.

Nebius is focused on scaling its AI infrastructure rapidly by securing new data center plots, stable power supplies, and the latest GPUs. The company is tripling capacity at its Finnish data center, has launched a new colocation facility in Paris, and plans further expansion, including in the U.S. It expects to have deployed more than 20,000 GPUs by year-end and is actively pursuing greenfield, build-to-suit, and colocation strategies to meet demand.

Recent product launches include a cloud computing platform purpose-built for AI, which offers flexibility and performance and sells GPU time by the hour, and Nebius AI Studio, a self-service inference platform that sells access to generative AI models by the token. These offerings target a broad customer base, from enterprises to AI developers.

Nebius has a long-standing partnership with NVIDIA and is an official cloud and OEM partner. The team expressed confidence in their ability to secure future generations of NVIDIA GPUs, including Blackwells, and expects timely shipments for new hardware in 2025.

GPU pricing has stabilized for current generations like H100s, though new chips like Blackwells are expected to command a premium. Most current customer contracts are under one year due to the market's preference for short-term commitments on older chips, but longer-term contracts are expected as Blackwells are deployed. Nebius is positioning itself as a flexible provider, offering both reserved and on-demand models.

Management said Nebius is more efficient than its peers thanks to its full-stack approach (data centers, hardware, and software). While specific gross margins weren't disclosed, returns were described as solid, with payback periods of around two years for older GPUs and expected to be shorter for newer hardware.

The company retained its 2025 ARR guidance of $500 million to $1 billion. If a share buyback is not pursued, Nebius anticipates surpassing the midpoint of this range by reallocating more capital to AI infrastructure. Growth is expected to be driven by capacity expansion, secured GPU supply, and strong demand visibility.

Nebius is building a global data center footprint, starting in Europe with facilities in Finland and Paris and actively expanding into the U.S. The customer base is increasingly international, with significant growth expected in the U.S. and further global expansion under consideration.

Hello, everyone, and welcome to the Nebius Group Third Quarter 2024 Earnings Call, our first results call since our return to Nasdaq. My name is [ Julia Baum Gardner ], and I represent the Investor Relations team. You can find our earnings release on our IR website, where it was published earlier today.

Now let me quickly walk you through the safe harbor statement. Various remarks that we make during the call regarding our financial performance and operations may be considered forward-looking, and such statements involve a number of risks and uncertainties that could cause actual results to differ materially. For more information, please refer to the Risk Factors section of our most recent annual report on Form 20-F filed with the SEC. You can find the full forward-looking statement in our press release.

During the call, we'll be referring to certain non-GAAP financial measures. You can find a reconciliation of non-GAAP to GAAP measures in the earnings release we published today.

With that, let me turn the call over to our host, Tom Blackwell, our Chief Communications Officer.

Thanks very much, Julia, and hello to everyone. So let me quickly introduce our other speakers that we have on the line today. So I'm pleased to have here with me in San Francisco this morning, our CEO, Arkady Volozh; and Chief Business Officer, Roman Chernin. And also joining us from our office in Amsterdam, we have Ophir Nave, our COO; Andrey Korolenko, our Chief Product and Infrastructure Officer; and our CFO, Ron Jacobs.

So you've probably seen that we put out quite a lot of material on our business a couple of weeks ago, ahead of the resumption of trading on Nasdaq. And hopefully you've had a chance to have a quick look at our Q3 results release this morning. Some of you have already sent your questions, and we thank you. For those who haven't, you can submit further questions via the special Q&A tab below at any point during our call, and we'll get to them.

So my suggestion is that we keep our opening remarks relatively brief to make sure we have plenty of time for Q&A. And so on that note, let me hand straight over to Arkady.

Thank you, Tom, and welcome, everyone. I'm very excited to be having this first earnings call since our resumption of trading on Nasdaq almost 2 weeks ago.

Let me briefly highlight our key points to set the scene for today's discussion. Our ambition is to build one of the world's largest AI infrastructure companies. This entails building data centers, providing AI compute infrastructure and a wide range of value-added services to the global AI industry. We have a proven track record with significant expertise in running data centers with hundreds of megawatts of power load. So we know what we're doing here. We're already pushing full steam ahead. We're securing plots for new data centers, ensuring we have stable power supplies, confirming orders for the latest GPUs, while also launching new software and other value-added services addressing the needs of the AI industry. In short, we're working hard to rapidly put in place the infrastructure that will underpin our future success.

Let me turn briefly to today's financial and operating results before we go to Q&A. First, I will start with our core infrastructure business. As you can see, we are growing rapidly. Revenue grew 2.7x compared to the previous quarter. We have a strong cash position. Cash and cash equivalents as of September 30 stood at around USD 2.3 billion. Capital expenditures totaled around USD 400 million for the first 9 months of 2024. Looking forward, we anticipate capital expenditures in the fourth quarter to exceed this amount as we plan to accelerate investments in GPU procurement and data center capacity expansion. This includes tripling the capacity of our existing data center in Finland. We also recently announced a new colocation data center facility in Paris, with more to come and to be announced very soon.

We expect to have deployed more than 20,000 GPUs by the end of this year. But really, we're just warming up, as you understand. While our financial performance has been strong, what's even more important at this stage is what we have been doing on the product side. In the last quarter, we introduced a number of strategic product developments. For example, we launched the first cloud computing platform built from scratch specifically for the age of AI. This platform offers increased flexibility and performance and will help us to further expand our customer base. This model is based on selling GPU time by the hour. And now customers can buy both managed services and self-service with the latest H200 GPUs.

We also launched Nebius AI Studio, a high-performance, cost-efficient self-service inference platform for users of foundation models and AI application developers. This allows businesses of all sizes to use generative AI quickly and easily. Here, we provide a full-stack solution and use a different business model: we sell access to gen AI models by tokens. Outside of our core AI infrastructure business, our other businesses also performed well. Toloka offers solutions that provide high-quality expert data at scale for the gen AI industry, and it grew revenue around 4x year-over-year.

Avride is one of the most experienced teams developing autonomous driving technology, both for self-driving cars and delivery robots. Earlier this month, Avride announced a multiyear strategic partnership with Uber in the U.S. And we also just rolled out our new generation of delivery robots, offering improved energy efficiency and maneuverability.

TripleTen is a leading educational technology player. In the last quarter, it increased the number of students enrolled in [indiscernible] 3x year-over-year across its key markets, the U.S. and Latin America.

In summary, we have been busy delivering strong results, but this is just the start of our journey. The big opportunities are still to come. And with that, let me wrap up and hand back over to Tom for the Q&A.

Thank you very much, Arkady. And just a reminder to everyone that you can submit your questions through the Q&A tab below. We have a few questions that have come in already, so we'll get going. The first question relates to the latest status of the buyback and whether it is still under consideration. Ophir, can I suggest that you take that question?

Yes, sure. Thanks, Tom. This is actually a great question because it has a direct impact on our 2025 guidance. But first, it may be a good idea to take a step back and remind ourselves where the idea of the buyback came from. After the divestment of our Russian business, we viewed a potential buyback as an instrument to give our legacy shareholders an opportunity to exit our business, especially in the absence of trading. And as we all know, at our latest AGM, our shareholders authorized a potential buyback within certain parameters. One of them is a maximum price of $10.50 per share, which represents the pro rata share of the net cash proceeds of the divestment transaction at closing. It does not ascribe any value whatsoever to the business that we are actually building.

Our shares resumed trading on Nasdaq about 2 weeks ago, and we are very happy to see investor interest in our story. We also see strong liquidity levels. We hope that this is a sign that our investors see the great opportunity in our business. If this is the case and the market for our shares remains strong, a buyback may not be required to accomplish the purpose for which it was originally planned. In that case, we may have the opportunity to allocate much more capital to our AI infrastructure and deliver our plans even faster. But let's try to put this into actual numbers.

As probably everyone knows, we originally provided a $500 million to $1 billion ARR guidance range for 2025. In the scenario where a buyback is ultimately not required, we estimate that we will be able to deliver above the midpoint of this $500 million to $1 billion ARR range. And we think that this is very exciting.

Great. Thank you very much for that, Ophir. The next question is about competition and how we're seeing the market. Arkady, I'll come to you on this. Specifically, the question is: how does Nebius differentiate itself against the hyperscalers and the other private competitors in the GPU cloud space?

Yes. That's a great question. One second. So actually, the question is why we believe Nebius will be among the leading independent GPU cloud providers, right?

Actually, yes.

Yes, yes. I usually have several answers to this question. First, we provide a full-stack solution, which means that we build data centers, we build the hardware, servers and racks inside the data centers, and we build an AI cloud platform on top of it, a full cloud. And we build services for those who build and train models and build applications, and we have our own expertise in this area. So we have a full-stack solution. This translates into better operational efficiency; we believe that our costs may be lower because of it. And our product portfolio is much stronger. So full-stack gives us the first block of differentiation. Second, the platform we're providing was built from scratch in the last several months. This is the first fully integrated solution for AI infrastructure built anew, without any unnecessary functions or legacy code to support. It's a brand-new solution. And what is more important, it's targeted at the tasks of a very dynamic AI industry. So we offer much more flexibility, we can offer better pricing and so on. The third block of differentiation, I would say, comes from the fact that we have a very strong team. This team, more than 500 specialists, 400 AI specialists, specifically cloud engineers, who are ready to support our growth, has experience building systems even larger than what we have today and has ambitions to build much larger systems. This translates into faster time to market when we launch new products, better customer support and a better understanding of our clients, because we are the same as them. So these are the 3 major things we see as our competitive advantage: the full-stack solution; the brand-new, specifically tailored platform we just launched; and us, the people and engineers who really understand the area.

Thank you very much, Arkady. So actually the next question is around our data center expansion strategy. So Andrey, I'll come to you on this. And specifically, how do we think about it overall? How do we think about geographic locations? And what are potential constraints in terms of rolling out the strategy?

Yes. Thanks, Tom. Hi, everyone. Andrey Korolenko speaking. So we have 3 ways to source data center capacity. The first is colocation, which is just renting space in someone else's data center. Second, build-to-suit projects, where someone else builds the data center with their own CapEx according to our design, under a long-term lease to us. And third, greenfield projects, where we build the data center from scratch and operate it ourselves. Our preference would be greenfield. This allows us to realize value from the full-stack approach and reach maximum efficiency, as the team has decades of experience designing, building and operating data centers. But that's subject to the availability of capital, and greenfield projects have longer delivery times. So we're going to use all 3 of these ways to get capacity, but we view colocation as a shorter-term solution, mostly for the next few quarters, while the other 2 will be [indiscernible]. Our first data center is in Finland, where we have already commenced the capacity expansion. We are actually tripling the capacity during the next year: the first phase will probably be in mid-2025 and the last phase at that site later in 2025. I also wanted to mention that it fully supports liquid cooling technologies for the newer GPUs and the new trends in that area. In the medium term, we plan to use, and are actively engaging in, build-to-suit arrangements. It's a less capital-intensive alternative to greenfield, but it still allows us to stick to our design and maintain most of our operational effectiveness, while keeping us more flexible in terms of capital. Talking about geographical locations: Finland was our home base, our first data center. A couple of months ago, I believe, we announced the Paris location, which is coming into operation as we speak. The next one will be announced in the U.S. Looking forward, we will be building infrastructure mostly in Europe and in the U.S. That's it, Tom. I think I covered it.

Okay. No, I think that's very good. Thank you very much, Andrey. Just for people's reference, Andrey referred to our Finnish data center, and there was a press release a few weeks ago with more specific detail on the expansion plans there. There was also an announcement a few weeks ago about the Paris data center, if people want to refer to that for additional detail. For the next question, we've received a few questions around the NVIDIA partnership and relationship, so I'm going to combine them into one, if that's okay. And actually, Andrey, maybe I can stick with you here. First of all, what's the history as well as the current status of the NVIDIA partnership, and what does it bring to Nebius? What's the current status of NVIDIA orders and shipments, and of our ability to secure new GPU generations going forward, including the Blackwells? So let me give you that set of questions, if I can.

Yes. Thanks, Tom. Talking about our collaboration with NVIDIA, our team has long-term experience working with NVIDIA, more than a decade of building GPU clusters and running them at a pretty significant scale. As for the partnership, we are an official NVIDIA Cloud Partner (NCP) and OEM partner. That helps us develop the data center design and the rack design, get all the advantages of the NVIDIA software stack, and collaborate on both the technical and business sides. About future shipments, GPU availability is always a tricky one, but I'm quite sure that we have a good track record of shipments throughout this year. And, talking with NVIDIA, we are feeling confident that next Q1 and Q2 shipments of the new generations are secured for us.

Okay. Thank you, Andrey. So Roman, I'll come to you. So we've had a couple of questions around GPU pricing and sort of generally how we see the evolution of GPU pricing, and overall, how we think about the sustainability of pricing in light of the regular flow of new generation launches and so on. So Roman, perhaps I can come to you to address that.

Yes. Thank you, Tom. Talking about pricing, I think it's important to talk with respect to generations. For the next generation, the GB200 Blackwells next year, I think the pricing is not fully set, but we can expect a premium and margin, as normally happens at the beginning of a generation. For Hoppers, which is the current active generation that everybody is talking about, I would say that for H100s, the most popular model today, pricing has come to a fairly stable situation at the moment. There was obviously a very high premium, which has now reduced, but prices still support healthy unit economics. We also have H200s, which are not available in such big volumes in the market, and for them we also see pretty healthy prices. It's important to mention here that we were a little bit late on the Hopper generation compared to some of our competitors. And next year, most of our fleet will be Blackwells, which gives us the advantage of being at the beginning of this generation without a large legacy fleet. Looking forward, I would say it's normal that when chip generations shift, prices go down. But our angle is that we invest a lot in the software layer to prolong the life cycle of the previous generation, when we can provide the service to the customer not as raw compute on specific chips but as a service. As Arkady mentioned, we launched our token-as-a-service platform for inference, and down the road there could be new services that hide the specific chip models under the hood, so we can extract the value for the customers.

Great. Thank you very much, Roman. And actually, Roman, I'll stay with you because the next question is really around the clients as of today and what are the sort of typical contract terms and durations for current customers and how we think that will evolve over time…

Yes. Just to remind you, we started less than a year ago. Our first commercial customer started in Q4 2023. As of now, we have something like 40 managed clients. It's important to mention that the customer base is pretty diversified; we don't have any single dominating client. In terms of customer profile, most of our customers are AI-centric, like gen AI developers, people for whom AI is their bread and butter. And we also see our exposure to enterprise customers growing step by step. Talking about contracts, as of today, since our fleet is H100s, most of our contracts are under 1 year. The reason for that is that customers in the market don't feel comfortable committing to H100s for more than a year, which is natural. Again, I want to remind you that next year the fleet will be mostly Blackwells, and we expect Blackwell contracts next year to move toward longer-term arrangements. It's also important to say that for H200s, which are still Hoppers, we see a lot of discussions in the pipeline for 1- to 2-year contracts, with healthy prices and healthy durations. We also have a lot of on-demand customers. This is honestly part of our strategy to position ourselves as the most flexible GPU cloud provider today. We really want our customers to benefit from a platform that lets them combine reserved capacity and pay-as-you-go and be more flexible in their capacity planning, because this is a real pain point in the market that we address. And again, with Blackwells, we anticipate the duration of contracts will grow significantly. When most of the fleet shifts to Blackwells, the contract mix in the portfolio will shift toward longer terms.

Great. Thank you very much, Roman. So actually, the next question is about Arkady. But since we have Arkady on the line, I'll let him field it. But the question was, "How engaged is Arkady with the business today? And what are his plans for the future?" I guess, with respect to Nebius.

How engaged? Well, I'm fully engaged. If you ask me honestly, I may be too engaged. But seriously, it's a totally new venture, a new start-up. If you look at the team, the enthusiasm and the mood, it's just a nice feeling to be there. But it's not just a start-up; it's a very unusual start-up. It's a new project on one hand. On the other hand, we start with a huge amount of resources: not just the team, but also the platform we have, hardware and software, and a lot of capital. And this is a huge opportunity to build something really, really big here in the AI infrastructure space, something that will last and be visible. So I am engaged. It's a very interesting new game, a new start-up. And aside from pure enthusiasm, just to remind you, I personally made a big bet on this: something like maybe 90% of my personal wealth is in this company. So I truly believe that we're building something big and serious here, and we have great prospects. We are very enthusiastic and we want to make this thing go.

Thank you very much, Arkady. So actually, Ophir, I'll come to you. We have a question around sort of margins and unit economics. So specifically, a question is, can you elaborate on Nebius' gross margin and unit economics for the GPUs? And what are the returns on invested capital or payback expectations that we see?

These are actually 3 questions.

Yes, sorry.

So let's start with unit economics. Our unit economics are actually different from most of the reference points that investors have. We see that investors compare us to data center providers on the one hand, or to plain-vanilla GPU-as-a-service players, bare metal players as we call them, on the other. Most of these players are sitting on very long-term contracts with fixed unit economics. We are neither of these 2. We are a truly full-stack provider. So what does that mean for our unit economics? Obviously we do not disclose specific numbers, but let me share how we think about this. To start with, we believe we are more efficient than our peers. Why? We have efficient data centers, in-house designed hardware and full-stack capability. Furthermore, we also create value from our core GPU cloud. This is already part of our unit economics, and we anticipate that this part will grow. It also brings another benefit: it allows us to serve a wider customer base, and we believe that this customer base will drive demand in this space in the future. So those are a few words about unit economics. On our potential returns: our returns are already solid, but we have yet to get access to the new generations of GPUs, for which we see huge demand. And with our intention to continue developing our software stack and value-added services, we believe that we will be able to improve our return on invested capital even further. I think you also asked about payback expectations. The payback period obviously depends on the GPU generation; it can be somewhere around 2 years for the older ones and much less for the new ones. It's a little bit premature, I would say, to talk about it, but we will probably be in a much better position to share specifics once we deploy and sell our first GB200s. Fortunately, we expect to be among the first to do so, so hopefully it will not be too long. I hope that answered the questions.

No, I think you did. There was indeed a lot in the question, but well unpacked. Thank you. So Roman, let me come back to you. So there's a question which is basically in the context of our 2025 guidance range. Can you help us to sort of understand what is already contractually secured versus elements that might still be uncertain? So I guess this relates to things like power supply, GPUs, procurement, clients, contracts, et cetera.

Yes. Thank you, Tom. I think there are really 3 lines that determine growth. One is DC capacity, data center capacity, and access to power is a part of it. As Andrey shared before, we have secured the growth for our core facility in Finland, and we are now in advanced discussions to add more colocation capacity in the U.S. I expect that we'll disclose more by the end of the year. On the GPU side, we already mentioned today that we have a long-standing relationship with NVIDIA, and that puts us at the front of the line to bring the state-of-the-art NVIDIA Blackwell platform to customers, and we expect to double down on it in 2025. And client-wise, on the demand side, I think we have quite good visibility through the end of this year. For next year, our forecast is mostly based on the capacity available. We believe it's still more of a supply-driven model, because given our current size and the total addressable market, we don't see real limitations to securing enough demand during the next year. So, again, there are 3 lines to grow: you need enough DC space, you need to secure GPU supply and you need demand. And we feel pretty comfortable on all 3 lines.

No, that's great. And I suppose I could point out that we have one Blackwell already secured, but definitely more to come in 2025. The next question is about what remaining links there are back to Russia following the divestment. I can probably take that one. The simple answer is that the remaining links are no longer there. In reality, the separation started back in early 2022, when we embarked on the divestment process. When that divestment was completed in July of this year, it severed all of the remaining links. Just to put into context what that means: we don't have any assets in Russia, we don't have any revenue in Russia, and we don't have any employees in Russia. And at a technological and data level, all of the links are broken at this stage. So effectively it was a clean and comprehensive break. It's probably also good to point out that this is not just our own self-assessment. First of all, the divestment transaction I referred to, which was the largest corporate exit from Russia since the start of the war, had broad support from Western regulators. And the resumption of trading on Nasdaq followed a fairly extensive review process, which concluded that we were in full compliance with the listing criteria; in other words, the Russia exit was considered complete at that point. So the Russia chapter is over, and we look forward to the next chapter, one that we're very excited about. Next question, Ophir, I'll come back to you. How long will your cash balances last? What are your investment plans for 2016 and beyond? And will you need to raise more external capital beyond that, and if so, how and in what form? And again, apologies, it's a few questions packed into one.

And I guess that you meant 2026, not 2016.

Yes, it's actually 2026, indeed, yes.

We have no plans for 2016, actually. But for the future, it's clear that our first priority, by far, is our CapEx investment into our core Nebius business. For this reason, our cash sufficiency period is, at the end of the day, basically a function of how aggressive we want to be in our investment in data centers and GPUs. Given the strong demand for our products and services that we see in the market, our plan is actually to invest aggressively. On the other hand, it is important for us to maintain sufficient liquidity to cover our cash burn for a reasonable period of time, and we make sure that we do. I think it's worth mentioning in this context that, as we previously disclosed, we are exploring, together with Goldman Sachs, our financial advisor, different strategic options to accelerate our investment in our AI infrastructure even further. And our public status provides us with access to a wide range of instruments and options. So to summarize, we plan to move aggressively into AI infrastructure, while keeping sufficient cash for our burn rate and exploring other potential options to move even faster on our plans.

That's great. Thank you, Ophir. So the next question is around the ARR guidance range of $500 million to $1 billion for next year. I think Ophir, to some degree, covered this in his first answer, but let me add a couple of points on top of that. That guidance took into account a range of possible scenarios, including the timing of the GB200 delivery, but the key factor here is actually availability of capital. So there are a couple of things to think about. As of now, we have around $2 billion on the balance sheet, and the question is how much of this we can allocate to CapEx. Here there are a couple of points. We've made reference to a potential withholding tax that we may have to cover, and depending on how the discussions with the Dutch tax authorities go, a reasonable share of the amount allocated for a potential tax payment could be reallocated towards CapEx. And, as Ophir pointed out earlier, there could be a scenario where we don't have any impact from a potential buyback, which would mean that we have an opportunity to allocate a lot more capital to AI infrastructure CapEx and deliver on our plans even faster. But again, I think Ophir covered that well in his first answer. In a scenario where we're able to reallocate, we estimate that we'll be able to deliver above the midpoint of the $500 million to $1 billion ARR guidance range that we gave for year-end 2025. Okay. Very good. So moving on. Ophir, if I can come back to you for the next question. It's around what the strategy is for the, let's say, noncore business units, and whether there are any monetization plans for the portfolio companies. Just for clarity, we're talking here about Avride, TripleTen and Toloka.

Yes. First, we truly believe that each one of these businesses is among the leaders in its field, and each one of them has great prospects. That said, as we have said time and again, the majority of our focus and capital is being allocated towards our core AI infrastructure business. For this reason, we are very [ flexible ] in the strategic development of our other businesses. As one example, for some of the businesses this may include joining forces with strategic partners or seeking external investors, et cetera. So again, we truly believe that these businesses will do great and will be profitable for us, but our main focus, both from a business [indiscernible] and capital perspective, is our core AI infrastructure business.

Very good. Thank you. The next question, which I can take, is around our thinking on investor relations going forward. Specifically: do we expect to see broker research coming out soon? And more generally, what are the plans around investor relations over the coming months as we reintroduce our company to the market? Indeed, we had a fairly lengthy and slightly strange period where we were dark while we were finishing the divestment and putting in place all of the infrastructure for this new company. We were very pleased to get back onto Nasdaq, and that very much sparks a new chapter and a return to, I would say, a slightly more normal life: exciting, but maybe a bit more normal. We're definitely starting to reengage with the various banks to reinitiate sell-side research coverage. That's a process that's underway right now, so expect to see more coming out over the coming months. We're getting back into a more normalized IR rhythm with quarterly reporting going forward, and look out for us at investor conferences over the coming months. So apologies for the blackout period for some time, but we're back, and we'll be doing that. We've also had a lot of inbound interest from investors, so we're going to be doing a lot of one-on-one calls over the coming weeks. Feel free to get in touch with all of us, and we'll engage as much as we can. It's a busy time, but we'll find time. So that's on the IR side. Ophir, I'll come to you perhaps. There's a question about the ClickHouse stake; I'd remind people that we have an approximately 28% stake in ClickHouse. The question is, can we give more details on that business and how it's performing? What is the revenue of ClickHouse? And is there a plan to go public?
We probably can't address all of that, but Ophir, perhaps you can comment to the extent that we can.

Yes. Actually, it's very simple. We treat our stake in ClickHouse as a perfect investment. First of all, we don't have any immediate plans for it. Right now, we continue to own it. As a minority shareholder, we are not in a position to provide any more details on the business, whether about its projections, plans, business trends, et cetera. It's not for us to say. But we can say, and we are very happy to say, that to our understanding the business is well regarded by partners and other market participants. So we are very happy about that.

Thank you, Ophir. Andrey, I'll come to you on the next question. It's around power supply and power access: do we think that we have sufficient access to support future computing needs and GPU requirements? Andrey Korolenko?

Yes. Thanks, Tom. Well, as I just mentioned, in the short term we are relying on rented capacity plus the expansion of our Finnish data center, and then switching to greenfield and build-to-suit projects, again subject to available capital. But generally, in the midterm, we don't see problems supporting the growth, even if we are talking about growing by an order of magnitude. The only challenging period might be the next 3 to 4 quarters. But I truly believe that we are in a very good position not to be blocked by data center capacity availability. That's it in short.

Great. Thank you, Andrey. The next question is about the U.S.: what expansion plans do you have in the United States? Do you already have corporate customers in the U.S.? And generally, how do you see development there? Roman, maybe I can come to you to have a crack at that.

Yes. Thank you. It's super relevant since we are in San Francisco now. I think we can say that we already have a very strong focus on the U.S. We see that, organically, many of our customers come from the U.S. We don't yet have much awareness here, as we've just started, but already a big portion of our customers come from the U.S. And as we said in answer to previous questions, a big part of our customers are AI-focused companies, and obviously many of them are here. So we're developing the team, and we're planning to expand capacity here, as Andrey already mentioned. So yes, I think it will be a super-important part of the game.

Fantastic. The next question flips to the other side of the pond, to Europe. Arkady, maybe I can come to you on this one: what's the rationale for currently building infrastructure in Europe? And generally, how do we see the opportunity in Europe?

Well, first of all, from a corporate standpoint, we are a Dutch company traded on Nasdaq, so historically a European company. After this big split, we inherited a big data center in Finland, which, as you know, is also in Europe, and which we are now tripling, so it will be a pretty big facility. We also recently launched Paris, which is also in Europe. And we're discussing several greenfield projects, some of which will be in Europe, though not only there. So Europe definitely has some advantages for us in terms of competition and easy access to stable, cheap power supply. But at the same time, although we started our infrastructure in Europe, we are building a global business. First of all, we have global customers. More than half of our customers today, I think, come from outside Europe, the U.S. first of all. And going forward, we are actively looking to expand our geography to become a truly global AI infrastructure provider, in particular in the U.S., and there will be some news very soon about our infrastructure expansion here. We have also already announced that we follow our customers: we opened several offices in the U.S., in San Francisco and Dallas, and New York is coming soon. These are mostly sales and services offices. So infrastructure is moving to the U.S., not only Europe, and customer services are moving to the U.S. too. But again, it's not just Europe and not just the U.S.; it will be a global network of data centers and a global service provider. We are looking into other regions pretty actively as well. So yes.

Great. Sorry, I didn't mean to cut you off, that's…

Yes, no, no, that's actually it, yes. So just watch for our upcoming announcements, which will come very soon.

Very good. Very good. So actually, Roman, maybe I can come to you. There's a question about how you see customer needs and sort of use cases evolving. So obviously this is a rapidly developing industry. So any color that you can add around that would be great.

Yes. This is actually a brilliant question, and I'd love to answer it. I think the most significant shift that we see now is that a lot of inference scenarios are coming. Some time ago, most GPU hours were consumed by large training jobs, that is, by developing the products; now we see that a lot of compute is consumed serving customers, which we consider a great development of the market, and we're moving forward in general as an industry. For us, I believe this shift is also super important, because since we are very much focused on the software platform, when it comes to more complex scenarios, we can create much more value for our customers. Another thing to mention is that the scenarios and verticals are also diversifying. We see, for example, a lot of customers coming from life science, biotech and health tech. We see a lot of interest in robotics, and in others, like video generation now, and [ vlog ] mining. So we think there will be a lot of sectors and niches that AI is penetrating. And our mission here is to support the people who build the products with infrastructure, and to develop the platform together with them.

Great. And Roman, let me stay with you, because there's a follow-on to this one: how do you think about the evolution of the customer base going forward, over time?

Yes. I think we've mostly covered it already. There are a few dimensions. One is the structure of the contracts: we said that down the road, with the new generation of chips, we expect more long-term contracts again, as large training jobs come back. Then, from a scenario perspective, the shift to inference is the most important. And from a market sector perspective, we see an increasingly diversified portfolio of scenarios and types of tasks that people address with AI. And I think the next big thing is when AI starts to be adopted more in enterprises. Right now most of the customers are AI native, and then we'll see a lot of adoption in enterprises. So that will be an important shift, maybe during the next year.

Okay. Very good. Andrey, maybe I'll come to you. There's a specific question here about whether we have the capability to support heterogeneous GPUs.

In short, yes. First of all, I would like to mention that we follow the demand that we see in the market, and as the market develops, we are developing what we can provide. At this point in time, NVIDIA is the state-of-the-art solution. But in our R&D, we have a lot of different options in development. We'll just follow the demand and try to deliver the best possible solution to customers.

Great. So we're coming up on time here. There were a few remaining questions around the number of GPUs: how many we have in operation now, how many we anticipate having by year-end and what the outlook is going into 2025. I'll refer people to the materials that we disclosed a couple of weeks ago, because we go into quite a bit of detail on the specific capacity numbers there, so I think you'll find the answers to those questions there. If you have any follow-ups, don't hesitate to come to us. But otherwise, we're coming up on 6:00 a.m. San Francisco time. So let me thank everybody, management and all of our current and potential investors, for joining us. We're very happy to be back in the public markets. And as I think Arkady said, this is really just the beginning. We're very excited about continuing the discussion with all of you. So with that, thank you very much, and I wish everybody a good rest of the day, afternoon, evening or night.

Thank you.